What is prompt engineering? A practical guide

Prompt engineering is really just the art of talking to an AI. It's about crafting the right instructions—or "prompts"—to get a Large Language Model (LLM) to give you exactly what you want. This isn't about coding. It's about becoming a master communicator who knows the perfect way to ask for something to get back accurate, relevant, and genuinely creative results.

Think of it as learning the AI's language so you can get the best possible answer every single time.

The Art of Communicating with AI

Ever asked an AI like ChatGPT a question only to get a generic, unhelpful, or flat-out wrong answer? We’ve all been there. That frustration is the gap between what we want the AI to do and what we actually told it to do. Prompt engineering is the skill that bridges that gap.

Imagine an AI model is an enormous library containing nearly all of human knowledge. A prompt engineer is the expert librarian who knows the precise way to phrase a request to find the perfect piece of information. Instead of just asking, "Tell me about cars," the expert asks, "Explain the principles of an internal combustion engine to a 12-year-old using a bicycle pump as an analogy." The quality of the input directly shapes the quality of the output. It’s that simple.

More Than Just Asking Questions

While it might start with simple questions, real prompt engineering is a strategic game. It’s a mix of logic, a splash of creativity, and a solid understanding of how these AI models "think" and process language. To get a handle on what this discipline is all about, it helps to break down the different hats a prompt engineer wears. And if you want to go back to basics, check out our insider's guide to https://promptden.com/blog/what-does-prompt-mean-an-insiders-guide-to-ai-html.

At its core, prompt engineering is about structuring your instructions to minimize ambiguity and maximize clarity. It’s the difference between giving a painter a blank canvas and giving them a detailed blueprint for a masterpiece.

And this skill isn't just for developers anymore. Marketers, writers, designers, and business analysts are all figuring out that a well-crafted prompt can seriously boost their productivity and the quality of their work.

To really illustrate the point, let's break down the multifaceted role of a prompt engineer into a few key functions. Each one is like a different specialty, but they all work together.

Core Roles in Prompt Engineering

| Role | Description | Analogy |
|---|---|---|
| The Architect | Designs the structure and format of the prompt to ensure a reliable and consistent output. | A chef writing a detailed recipe that anyone can follow to get the same delicious result every time. |
| The Translator | Converts a complex human goal into a clear, concise instruction that an AI can easily understand and execute. | A diplomat translating nuanced human intent into a language the other party will perfectly comprehend. |
| The Optimizer | Iteratively tests and refines prompts, making small adjustments to achieve significantly better performance. | A mechanic fine-tuning an engine to extract maximum power and efficiency from every component. |

This process of constant discovery and refinement is what makes prompt engineering such a fascinating and fast-moving field.

For a deeper dive, our friends over at Richly AI have an entire Prompt Engineering category packed with articles and resources. It’s a great place to keep learning.

How We Learned to Talk to AI

To really get why prompt engineering is such a big deal now, we have to rewind the clock a bit. This isn't a skill that just popped up out of nowhere. It was born from a massive shift in how we work with artificial intelligence. For a long time, getting an AI to do something new was a slow, expensive grind that involved fine-tuning it with huge, custom-built datasets.

Then 2020 happened. When OpenAI dropped GPT-3 on the world, it wasn't just another incremental upgrade—it was a seismic event that changed how we communicate with AI forever. It introduced a powerful concept that became the very foundation of modern prompt engineering.

The Birth of Few-Shot Learning

The game-changing idea that GPT-3 brought to the table was few-shot learning. This is really where prompt engineering begins. Researchers discovered that massive language models could figure out what you wanted from just a handful of examples provided right there in the prompt. This flipped the old methods on their head. You no longer needed massive labeled datasets, as the groundbreaking 2020 paper "Language Models are Few-Shot Learners" detailed. You can get a great breakdown of this shift at AI-Supremacy.com.

Suddenly, instead of retraining an entire model, anyone could "teach" it on the fly. This was huge. The instructions you fed the model—the prompt—became the main way to steer it.

Think of it like this: Before 2020, teaching an AI a new skill was like sending it to college for four years. After GPT-3, it became more like giving it a 10-minute briefing before a meeting.

This new accessibility blew the doors wide open. Anyone who could write clearly could now direct one of the most powerful tools ever built. The focus shifted from dense code to creative, logical conversation.

From Simple Commands to Strategic Dialogue

Once this breakthrough happened, the evolution of prompting techniques hit the accelerator. What started as simple instructions has blossomed into a sophisticated craft. Early on, users just made basic requests. But as the models got smarter, so did the strategies for talking to them.

You can see this evolution play out across a few key stages:

- Zero-Shot Prompting: The most basic form. You just ask the AI to do something without any examples, like "Translate 'hello' to Spanish."

- Few-Shot Prompting: The next level up. You give the AI a few examples in the prompt to show it the style and format you want in the answer.

- Chain-of-Thought (CoT) Prompting: A more advanced move where you ask the AI to "think step-by-step." This gets it to break down complicated problems into smaller, logical chunks, which seriously boosts its reasoning accuracy.

Each of these steps made AI more reliable and capable of handling tougher and tougher challenges. Prompt engineering became less about asking simple questions and more about designing a thoughtful, structured conversation. The simple command had evolved into a strategic dialogue, paving the way for the powerful principles and workflows that experts rely on today.

Core Principles for Effective Prompts

Knowing the definition of prompt engineering is a great start, but actually putting it into practice is a whole different ballgame. To get consistently great results from an AI, you need more than a simple question. You need a framework.

A killer prompt isn't about stumbling upon some "magic word." It's about building a clear, targeted instruction that leaves zero room for misinterpretation. This is where the core principles come in. Think of them as the four pillars holding up every effective AI instruction you'll ever write.

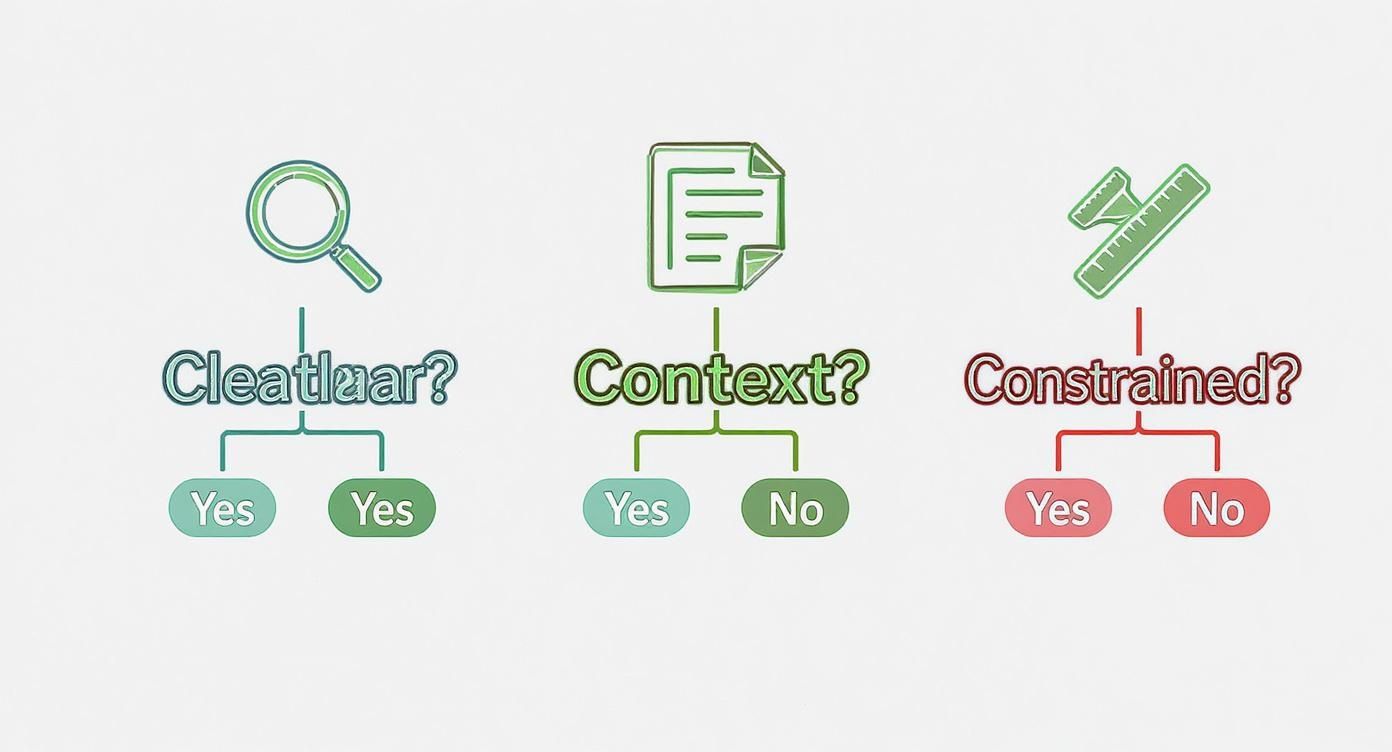

The Four Pillars of a Powerful Prompt

At its heart, a well-structured prompt gives the AI four key pieces of information. Each one adds a layer of detail, sharpening the focus until the model has a crystal-clear picture of what you want. These pillars are Clarity, Context, Constraints, and Persona.

Let's break down each one and see how they work together.

Pillar 1: Clarity

The first and most important principle is clarity. Your instructions have to be direct and unambiguous. The AI doesn't pick up on hints or unspoken expectations; it only knows the words you give it. Vague language gets you vague, unhelpful answers.

For instance, a beginner might start with something like this:

- Before: "Write about marketing."

This is way too broad. What kind of marketing? For what kind of business? In what format? The AI is forced to guess, and you’ll likely get a bland, generic overview.

A clearer prompt makes an immediate difference:

- After: "Write a blog post about low-cost social media marketing tactics."

Much better. It specifies the topic ("low-cost social media marketing") and the format ("blog post"). The instruction is direct, leaving a lot less to chance.

Pillar 2: Context

Next up is context. This is the background info the AI needs to understand the "why" behind your request. Giving context helps the model align its response with your specific situation, audience, or goals. It's the difference between an answer that's technically correct and one that's genuinely useful.

By providing context, you are essentially giving the AI a lens through which to view your request. This helps ensure the final output is not just accurate, but also appropriate for your specific needs.

Building on our last example, here’s how we can add a layer of context to our marketing prompt:

- With Context: "Write a blog post about low-cost social media marketing tactics for a small bakery that is trying to attract local customers."

Now the AI knows it's writing for a small bakery whose main goal is to get local customers in the door. This context will shape every tactic it suggests, making sure they're perfect for a small, local shop.

Pillar 3: Constraints

Constraints are the rules and boundaries you put on the output. They define the "how," including things like length, tone, style, and structure. Without them, the AI might spit out a 2,000-word essay when all you needed was a quick paragraph.

Here are a few common constraints you can use:

- Word Count: "in 500 words" or "in a single paragraph."

- Tone of Voice: "use a friendly and encouraging tone" or "write in a professional, authoritative voice."

- Format: "present the answer as a numbered list" or "format the output in a markdown table."

Adding constraints to our prompt gives us even more control:

- With Constraints: "Write a 500-word blog post about low-cost social media marketing tactics for a small bakery trying to attract local customers. Use a friendly and encouraging tone, and end with a call to action inviting readers to visit the bakery."

Pillar 4: Persona

Finally, assigning a persona tells the AI who it should be. This is a powerful move that instructs the model to adopt the voice, knowledge, and perspective of a specific expert or character. It’s like casting an actor for a role.

Instead of getting info from a generic AI, you can get it from an expert content strategist, a seasoned financial advisor, or even a witty comedian.

Let's add the last pillar to complete our prompt's transformation:

- With Persona: "Act as an expert content strategist specializing in local business growth. Write a 500-word blog post about low-cost social media marketing tactics for a small bakery trying to attract local customers. Use a friendly and encouraging tone, and end with a call to action inviting readers to visit the bakery."

By weaving these four principles—Clarity, Context, Constraints, and Persona—together, we've engineered a prompt that is specific, targeted, and incredibly effective. We went from a fuzzy idea to a precise set of instructions that will reliably get us a high-quality, relevant, and useful response. That's the core of mastering prompt engineering.
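If you find yourself writing prompts like this often, it can help to treat the four pillars as separate fields and assemble them in code. Here's a minimal sketch of that idea in Python. The `PromptSpec` class and `build_prompt` function are hypothetical names made up for this illustration, not part of any library, and the way the pieces are joined is just one reasonable choice.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four pillars of a prompt."""
    task: str                                             # Clarity: the direct instruction
    context: str = ""                                     # Context: audience, situation, goal
    constraints: list[str] = field(default_factory=list)  # Constraints: length, tone, format
    persona: str = ""                                     # Persona: who the model should act as


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty pillars into a single prompt string."""
    parts = []
    if spec.persona:
        parts.append(f"Act as {spec.persona}.")
    task = spec.task.rstrip(".")
    if spec.context:
        # Context is appended to the task so it reads as one sentence.
        task = f"{task} {spec.context.rstrip('.')}"
    parts.append(task + ".")
    # Each constraint becomes its own short sentence.
    parts.extend(c.rstrip(".") + "." for c in spec.constraints)
    return " ".join(parts)


bakery_prompt = build_prompt(PromptSpec(
    task="Write a 500-word blog post about low-cost social media marketing tactics",
    context="for a small bakery trying to attract local customers",
    constraints=[
        "Use a friendly and encouraging tone",
        "End with a call to action inviting readers to visit the bakery",
    ],
    persona="an expert content strategist specializing in local business growth",
))
print(bakery_prompt)
```

Keeping the pillars separate makes it easy to swap one out, such as a different persona or tone, without retyping the rest of the prompt.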

Essential Prompt Engineering Techniques

Once you get the hang of the basic principles behind a good prompt, you can start digging into more specific, powerful techniques to handle complex jobs. These aren't just small adjustments; they're proven methods that engineers use to steer AI models toward much more accurate and logical results. Think of them as different tools in a workshop—each one is built for a specific purpose.

Learning these methods is what separates a casual user from someone who can reliably get high-quality outputs from an AI. We'll walk through three of the most foundational techniques: Zero-Shot, Few-Shot, and the incredibly useful Chain-of-Thought prompting. Each one unlocks a different level of what the AI can do for you.

Zero-Shot Prompting: The Direct Command

The simplest and most direct approach is Zero-Shot Prompting. In fact, this is probably how you first started talking to an AI. You give the model a straight command or ask a question without giving it any examples of what a good answer looks like.

It's like asking a talented chef to make you a peanut butter and jelly sandwich, assuming they've never heard of one. Based on their huge knowledge of food, they can probably piece it together and give you something pretty close to what you wanted.

For example, a simple zero-shot prompt is:

"Classify the following customer feedback as Positive, Negative, or Neutral: 'The shipping was fast, but the product arrived damaged.'"

The model has to lean entirely on its existing training to figure out the task and spit out an answer. It’s fast and simple, making it perfect for straightforward tasks where the AI already has a solid understanding of the concept.
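For anyone who wants to reuse a zero-shot prompt across many pieces of feedback, here's a rough Python sketch of a template. The function names `join_labels` and `zero_shot_prompt` are hypothetical and exist only for this example. The code only builds the prompt text; sending it to a model is left to whatever tool you use.

```python
def join_labels(labels: list[str]) -> str:
    """Format labels as 'A or B' or 'A, B, or C'."""
    if len(labels) < 2:
        raise ValueError("A classification prompt needs at least two labels.")
    if len(labels) == 2:
        return f"{labels[0]} or {labels[1]}"
    return ", ".join(labels[:-1]) + f", or {labels[-1]}"


def zero_shot_prompt(feedback: str, labels: list[str]) -> str:
    """Build a classification prompt that contains no worked examples."""
    return (
        f"Classify the following customer feedback as {join_labels(labels)}: "
        f"'{feedback}'"
    )


zero_shot = zero_shot_prompt(
    "The shipping was fast, but the product arrived damaged.",
    ["Positive", "Negative", "Neutral"],
)
print(zero_shot)
```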

To get the most out of any prompt, it helps to think through the key ingredients of a strong instruction.

This little decision tree shows how layering in more detail—like context and constraints—is a systematic way to improve your odds of getting a great response from the AI.

Few-Shot Prompting: Learning by Example

But what happens when a task is more nuanced or you need the output in a very specific format? That's when zero-shot prompting starts to fall short. This is where Few-Shot Prompting shines. With this technique, you give the AI a few examples (the "shots") of the task done correctly, right inside the prompt itself.

This is like showing that same chef three pictures of perfect peanut butter and jelly sandwiches before asking them to make one. After seeing the examples, they have a much clearer picture of the structure, style, and final product you’re looking for.

Sticking with our customer feedback task, a few-shot prompt would look something like this:

- Feedback: "I love the new design!" Sentiment: Positive

- Feedback: "The app keeps crashing on my phone." Sentiment: Negative

- Feedback: "The user interface is okay." Sentiment: Neutral

- Feedback: "The shipping was fast, but the product arrived damaged." Sentiment:

By showing the model these examples, you're essentially teaching it the exact pattern you want it to follow. This trick dramatically improves accuracy for things like classification, formatting, or getting the tone just right. You can dive deeper into this powerful method by mastering Few-Shot prompting for better AI results.
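The same pattern is easy to generate from a list of labeled examples. The sketch below is a minimal Python version. The function name `few_shot_prompt` is hypothetical, and the "Feedback / Sentiment" layout simply mirrors the example above rather than any required format.

```python
def few_shot_prompt(examples: list[tuple[str, str]], new_feedback: str) -> str:
    """Build a few-shot prompt: labeled examples first, then the unlabeled item."""
    lines = [
        f'Feedback: "{text}" Sentiment: {label}'
        for text, label in examples
    ]
    # The final line leaves the label blank so the model completes the pattern.
    lines.append(f'Feedback: "{new_feedback}" Sentiment:')
    return "\n".join(lines)


shots = [
    ("I love the new design!", "Positive"),
    ("The app keeps crashing on my phone.", "Negative"),
    ("The user interface is okay.", "Neutral"),
]
few_shot = few_shot_prompt(
    shots, "The shipping was fast, but the product arrived damaged."
)
print(few_shot)
```

Because the examples live in a plain list, you can add, remove, or reorder them and quickly see how the model's answers change.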

Chain-of-Thought Prompting: Showing Your Work

For problems that involve logic, math, or any kind of multi-step reasoning, even few-shot prompting can fail. The AI might try to jump straight to the conclusion and make a simple mistake along the way. Chain-of-Thought (CoT) Prompting fixes this by telling the model to "think step-by-step" and lay out its reasoning process.

Instead of just providing the final answer in your examples, you show the AI how you got to the solution. It's the classic difference between telling a student the answer to a math problem is 19 and actually showing them the equation and how you solved it.

Chain-of-Thought prompting can make a big difference on complex reasoning tasks. It pushes the model to slow down and write out its intermediate steps before committing to an answer, which tends to improve accuracy on logic-based problems, though the size of the gain varies by model and task.

Take this simple logic puzzle:

- A So-So Prompt: "John has 5 apples. He gives 2 to Sarah and then buys 3 more. How many apples does he have?"

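- A CoT Prompt: "Q: Maria has 8 pencils. She gives 3 to a friend and then buys 4 more. How many pencils does she have? A: Maria starts with 8 pencils. She gives 3 away, which leaves her with 8 - 3 = 5 pencils. Then she buys 4 more, so she now has 5 + 4 = 9 pencils. The answer is 9. Q: John has 5 apples. He gives 2 to Sarah and then buys 3 more. How many apples does he have? A:"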

By showing the reasoning, you guide the AI down a logical path instead of letting it guess. This technique has quickly become a cornerstone of advanced prompt engineering.
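To make the idea concrete, here's a small Python sketch that builds a chain-of-thought prompt: one worked example with the arithmetic spelled out, followed by the new question. The helper names `worked_example` and `cot_prompt`, the Maria example, and the closing "Let's think step by step" cue are all assumptions chosen for illustration.

```python
def worked_example(name: str, item: str, start: int, given: int, bought: int) -> str:
    """Return a question plus an answer that shows every arithmetic step."""
    remaining = start - given
    total = remaining + bought
    question = (
        f"Q: {name} has {start} {item}, gives away {given}, "
        f"and then buys {bought} more. How many {item} does {name} have?"
    )
    answer = (
        f"A: {name} starts with {start} {item}. "
        f"Giving away {given} leaves {start} - {given} = {remaining}. "
        f"Buying {bought} more gives {remaining} + {bought} = {total}. "
        f"The answer is {total}."
    )
    return f"{question}\n{answer}"


def cot_prompt(example: str, question: str) -> str:
    """Place the worked example before the new question and cue step-by-step reasoning."""
    return f"{example}\n\nQ: {question}\nA: Let's think step by step."


cot_example = worked_example("Maria", "pencils", start=8, given=3, bought=4)
cot = cot_prompt(
    cot_example,
    "John has 5 apples. He gives 2 to Sarah and then buys 3 more. "
    "How many apples does he have?",
)
print(cot)
```

Computing the numbers in the worked example, rather than typing them by hand, is a cheap way to avoid teaching the model from an example that contains an arithmetic slip.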

Choosing the right technique is all about matching the tool to the job. The table below breaks down these core methods to help you decide which one to pull out of your toolbox.

Comparison of Prompting Techniques

| Technique | Core Concept | Best Use Case | Example Snippet |
|---|---|---|---|
| Zero-Shot | Give a direct instruction with no examples. | Simple, well-defined tasks like summarization or direct questions. | "Summarize this article." |
| Few-Shot | Provide a few examples of the desired input/output format. | Classification, data extraction, or tasks requiring a specific style. | "Translate to French:\nsea otter -> loutre de mer\npeppermint -> menthe poivrée\ncheese ->" |
| Chain-of-Thought (CoT) | Show the model how to reason through a problem step-by-step. | Math problems, logic puzzles, and multi-step reasoning tasks. | "Q: Roger has 5 tennis balls... A: Roger started with 5 balls... he has 5 + 2 = 7 balls." |

Each technique builds on the last, giving you more control and precision. By mastering Zero-Shot for speed, Few-Shot for specificity, and CoT for complex reasoning, you can dramatically elevate the quality and reliability of your AI outputs.

Prompt Engineering in the Real World

It’s one thing to talk about the principles of prompt engineering, but it’s another thing entirely to see it making a real impact. This isn't just theory. It's a hands-on skill that people are using right now to solve actual business problems, get more done, and achieve better results.

From the marketing department to the dev team, a well-written prompt is the critical link between a human goal and an AI's ability to execute it. Let’s jump into a few scenarios where smart prompting makes all the difference.

Crafting On-Brand Marketing Copy

Imagine a marketing team for a sustainable fashion brand. They need some fresh ad copy for a new line of recycled jackets. A lazy, generic prompt would spit out something bland—the kind of text that fails to grab attention or connect with the brand's mission.

But an expert prompt engineer knows better. They’ll build a detailed instruction that nails the core principles: clarity, context, constraints, and persona.

Example Marketing Prompt:

Act as a senior copywriter for a mission-driven, eco-conscious fashion brand. Your tone is optimistic, passionate, and slightly rebellious. Write three variations of Facebook ad copy for our new jacket made from 100% recycled materials. Each variation must be under 50 words, include an emoji, and end with a call to action to "Shop the Revolution."

See the difference? This prompt doesn't just ask for "ad copy." It tells the AI who to be, what rules to follow, and how to align with the brand's soul. The result is sharp, targeted copy that actually resonates with the right people, saving the team hours of painful brainstorming sessions.

Accelerating Software Development

For developers, prompt engineering is quickly becoming a secret weapon for productivity. Let's say a software engineer is banging their head against the wall trying to debug a tricky Python function. Instead of spending hours staring at the screen, they can bring in an AI model as a partner.

A vague "Why is my code broken?" won't get them very far. A structured prompt, packed with context, is the key.

- Code Snippet: First, they paste the exact function that’s causing trouble.

- Error Message: Then, they include the specific error message and traceback the Python interpreter is raising.

- Desired Outcome: They clearly explain what the function is supposed to do.

- The Ask: Finally, they request a step-by-step breakdown of the error and a corrected version of the code, complete with comments.

This approach turns the AI from a simple search tool into a genuine coding assistant. The model can analyze the code in context, spot the flaw, and offer a real solution, slashing debugging time.
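The four parts listed above can be stitched together with a few lines of Python. This is only a sketch: the `debugging_prompt` function is a hypothetical helper, and the buggy `average` function is a made-up example, not code from a real project.

```python
def debugging_prompt(code: str, error: str, expected: str) -> str:
    """Combine code, error, desired outcome, and the ask into one prompt."""
    return "\n\n".join([
        "Here is a Python function that is not working:",
        code.strip(),
        "It raises this error:",
        error.strip(),
        f"What the function should do: {expected.strip()}",
        "Explain the error step by step, then give a corrected version "
        "of the function with comments.",
    ])


# Made-up example: the function fails when it is called with an empty list.
buggy_code = '''
def average(values):
    return sum(values) / len(values)
'''

debug_prompt = debugging_prompt(
    code=buggy_code,
    error="ZeroDivisionError: division by zero",
    expected="Return the mean of a list of numbers, or 0.0 for an empty list.",
)
print(debug_prompt)
```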

Powering Intelligent Customer Support

A customer support team wants to build a chatbot that does more than parrot FAQs. They need it to solve real problems with a human touch. That requires some sophisticated prompt design to guide the bot’s behavior, especially in tricky situations.

A customer is reporting that their recent order arrived with a damaged item. Your role is an empathetic and efficient support agent. First, apologize sincerely for the issue. Second, ask for the order number and the specific damaged item. Third, offer two solutions: a full refund or a free replacement shipment. Maintain a patient and helpful tone throughout the interaction.

This detailed, role-playing prompt gives the AI a clear script to follow. It ensures the chatbot responds consistently, follows a logical workflow, and maintains the company’s supportive tone. This leads directly to happier customers and frees up human agents to focus on the truly complex cases.
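In a real chatbot, instructions like these usually sit in a standing "system" message, with the customer's words passed in separately. Here's a minimal Python sketch of that setup. The role/content dictionary shape is an assumption modeled on a convention many chat tools use, so check your provider's documentation for the exact format. `SUPPORT_STEPS` and `support_messages` are hypothetical names.

```python
# The three steps from the prompt above, kept as data so they are easy to edit.
SUPPORT_STEPS = [
    "Apologize sincerely for the issue.",
    "Ask for the order number and the specific damaged item.",
    "Offer two solutions: a full refund or a free replacement shipment.",
]


def support_messages(customer_message: str) -> list[dict[str, str]]:
    """Pair a fixed system prompt with the customer's message."""
    numbered = "\n".join(
        f"{i}. {step}" for i, step in enumerate(SUPPORT_STEPS, start=1)
    )
    system = (
        "You are an empathetic and efficient support agent. "
        "A customer is reporting that their recent order arrived with a damaged item. "
        "Follow these steps in order:\n"
        f"{numbered}\n"
        "Maintain a patient and helpful tone throughout the interaction."
    )
    # Assumed message shape: a list of {"role", "content"} dictionaries.
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": customer_message},
    ]


messages = support_messages("My mug arrived cracked. What can you do?")
print(messages[0]["content"])
```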

Of course, prompt engineers and power users often rely on tools to make their lives easier. You can discover some useful AI Chrome extensions that help in these kinds of real-world scenarios. Ultimately, these examples show that prompt engineering is about more than just definitions. It's a skill that delivers tangible business value.

Why Prompting Is a High-Value Career Skill

Okay, so we know what prompt engineering is, but the real story is its explosive value in the job market. As companies scramble to weave AI into everything they do, the ability to talk to these powerful models effectively has become a make-or-break skill. This isn't just some niche tech role anymore; it’s a core competency for anyone working in the modern economy.

Organizations are pouring money into finding this talent for one simple reason: better prompts mean better business. A well-written instruction directly impacts the quality of AI-generated content, makes automation more efficient, and creates a much smoother experience for users. The prompt engineer is the critical link between what a human wants and what a machine does.

The Rise of the Prompt Engineer

The demand for people who have mastered this human-AI conversation has gone through the roof. Between 2021 and 2023, the generative AI boom created a massive vacuum for prompt engineering specialists. Job postings shot up, with some listings offering eye-watering salaries as high as $335,000 per year. That number alone tells you just how critical this skill has become. You can learn more about these industry salary trends.

This incredible growth points to a fundamental change in the tech world. The big value isn't just in building AI models anymore, but in applying them with skill and precision. A good prompt engineer ensures an AI model's immense potential doesn't go to waste, preventing costly mistakes, bland outputs, and burned server resources. They are, in essence, the quality control experts for the AI generation.

Responsibilities of a Modern Prompt Engineer

So, what does a prompt engineer actually do all day? It's a fascinating mix of creative, analytical, and technical work that sits right at the intersection of language, logic, and code. Their day-to-day responsibilities often include:

- Designing and refining prompts to make sure AI outputs are accurate, on-point, and match the company's voice.

- Building out prompt libraries and creating best-practice guides that other teams can use.

- Constantly testing and tweaking prompts to get the best performance for specific tasks, whether it’s writing marketing emails or generating Python code.

- Working alongside developers and product managers to build smart prompting strategies directly into AI-powered apps.

Think of the prompt engineer as a translator. They take complex business needs and convert them into a language that machines can understand and act on perfectly. They are the key to unlocking the real, practical value of artificial intelligence.

At the end of the day, getting good at prompt engineering is about much more than just chatting with an AI. It's a strategic skill that helps companies get the biggest bang for their buck from their massive AI investments. For anyone looking to build a future in this field, digging into a solid artificial intelligence learning path is the perfect place to start.

Common Questions About Prompt Engineering

As you start wrapping your head around this whole “prompt engineering” thing, it’s totally normal for some practical questions to pop up. This skill lives at the intersection of language and logic, and it can seem more complicated than it really is. Let’s clear up some of the usual queries to give you the confidence to jump right in.

Probably the biggest question we hear is whether you need to be a coder or have a super technical background. The short answer is a definite no. While developers absolutely use prompting for coding tasks, the core skill is communication, not programming.

If you can write clear, logical instructions, you already have the foundation to become a great prompt engineer. In this game, creativity and clarity are way more important than knowing Python.

Do Different AI Models Need Different Prompts?

Yes, 100%. Prompting is not a one-size-fits-all kind of deal. How you talk to an AI model depends heavily on its unique architecture and the data it was trained on. A prompt that works perfectly with OpenAI's GPT-4 might give you a bland—or completely different—result with a model like Google's Gemini or Anthropic's Claude.

- GPT-4 often shines when you give it highly detailed, persona-driven prompts and ask it to perform complex reasoning.

- Claude is well-known for handling long documents and has a more "constitutional" approach to safety, which can definitely influence its tone.

- Image models like Midjourney have their own unique syntax, using special commands like --ar 16:9 to control the aspect ratio. That’s a whole different language compared to text models.

The main takeaway here is to treat each AI model like an individual. A huge part of mastering prompt engineering is learning their quirks, strengths, and preferred communication styles. What works like a charm today might need a little tweaking for the next model that comes along tomorrow.

What Is the Best Way to Start Learning?

Honestly? The best way to learn is by doing. Don't get bogged down reading endless theory. Just open up a tool like ChatGPT, Gemini, or a specialized platform and start messing around.

Start with simple, direct commands and then slowly build up the complexity. Take a basic request and start layering in the principles we've covered: add more context, set some clear rules, and give the AI a persona to act as.

Pay attention to how each little addition changes the output. This hands-on, iterative process of testing and refining is where the real learning happens. Before you know it, you'll develop an intuition for how to guide the AI exactly where you want it to go.
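One simple way to run that experiment is to generate a series of prompts where each one adds a single new detail to the one before it. The Python sketch below does exactly that. The `layered_variants` function is a hypothetical helper, and the wording of each addition is just an example you'd replace with your own.

```python
def layered_variants(base: str, additions: list[str]) -> list[str]:
    """Return the base prompt plus one variant per addition, each building on the last."""
    variants = [base]
    for addition in additions:
        variants.append(f"{variants[-1]} {addition}")
    return variants


variants = layered_variants(
    "Write a blog post about low-cost social media marketing tactics.",
    [
        "The audience is a small bakery trying to attract local customers.",
        "Keep it to 500 words and use a friendly, encouraging tone.",
        "Write it as an expert content strategist specializing in local business growth.",
    ],
)

# Paste each variant into your AI tool in turn and compare the outputs.
for number, variant in enumerate(variants, start=1):
    print(f"Variant {number}: {variant}\n")
```

Changing one thing at a time makes it much easier to tell which addition actually improved the result.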

Ready to put theory into practice? PromptDen is the perfect place to start. You can discover thousands of high-quality prompts for just about any task, connect with a community of fellow creators, and find all the inspiration you need to master the art of AI communication. Explore our platform and start crafting better prompts today at https://promptden.com.