what is retrieval augmented generation? A Practical Guide

Retrieval Augmented Generation, or RAG, is a clever framework that gives a Large Language Model (LLM) access to an external, up-to-the-minute knowledge source. Think of it as giving the AI an open-book test instead of a closed-book one.

It works by first retrieving relevant information from your own data and then feeding that context to the LLM to help it generate a much more accurate, detailed, and trustworthy answer. This simple but powerful approach is one of the best ways to stop AI models from just making things up.

What Is Retrieval Augmented Generation

Imagine a brilliant historian who has memorized every single book in a gigantic library. Their knowledge is immense, but the library doors are sealed. Everything they know is frozen in time.

RAG is like giving that historian a key to a live, constantly updated digital archive. Before answering any question, they can now look up the very latest research, data, and facts.

In short, RAG isn't a totally new kind of AI model. It’s a powerful framework that pairs the incredible reasoning ability of an LLM with the factual, real-time recall of an external data source. This combination directly tackles two of the biggest headaches in AI today: outdated knowledge and fabricated answers, often called "hallucinations."

This approach first emerged as a breakthrough in 2020, addressing the huge limitation of LLMs being stuck with static training data. Before RAG, a model trained in 2022 would have no idea about events in 2023, leading to wrong answers in fast-moving fields like finance or healthcare. You can get a deeper look into the current state of Retrieval Augmented Generation on ayadata.ai.

Let's quickly compare what this looks like in practice.

Standard LLM vs RAG-Powered LLM At a Glance

The table below breaks down the fundamental differences between a standard LLM operating on its own and one that's been enhanced with a RAG framework.

| Feature | Standard LLM | RAG-Powered LLM |

|---|---|---|

| Knowledge Source | Internal, static training data | External, dynamic data sources |

| Knowledge Cutoff | Yes, fixed at the time of training | No, always current |

| Hallucinations | Higher risk of making things up | Significantly lower risk |

| Transparency | "Black box" - hard to trace answers | Can cite sources for its answers |

| Cost to Update | Requires expensive full retraining | Inexpensive - just update the data |

| Use Cases | General knowledge, creative writing | Enterprise search, customer support |

As you can see, RAG doesn't just improve an LLM; it makes it a far more reliable and practical tool for real-world business needs.

How RAG Solves Key AI Problems

By grounding every response in verifiable, real-time information, RAG makes AI outputs dramatically more reliable. The benefits are huge:

- Combats Hallucinations: It forces the LLM to base its answers on the facts you provide, drastically reducing the odds it will invent information.

- Ensures Current Knowledge: The external data source can be updated as often as you like, giving the AI access to the very latest information without costly and time-consuming retraining.

- Improves Trust and Transparency: A good RAG system can cite the specific sources it used to generate an answer, allowing users to easily verify the information for themselves.

The entire process hinges on crafting effective queries to pull the right data, a skill that sits at the very heart of prompt engineering. To learn more about this crucial skill, check out our practical guide on what prompt engineering is and how it all works. Ultimately, this framework is what makes AI dependable enough for serious business applications.

How The RAG Process Works Step By Step

To really get what retrieval-augmented generation is, you need to peek under the hood. So, let's walk through how a RAG system goes from a simple user question to a high-quality, informed answer. The whole thing is a surprisingly elegant, two-stage workflow.

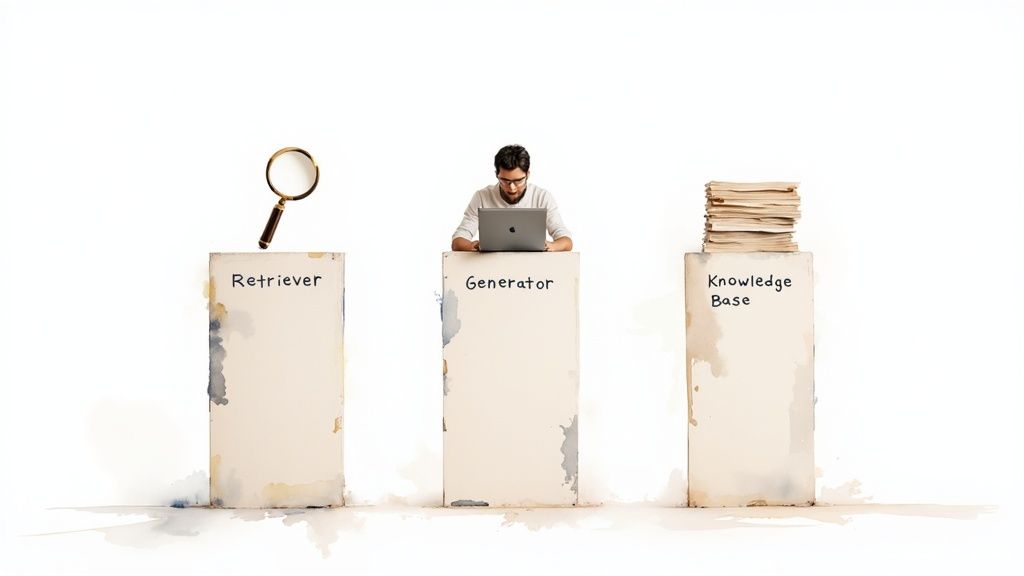

This infographic breaks down the core RAG process, showing how an LLM (the brain) gets paired with external data (the knowledge) to create a much smarter system.

This visual really captures the "Retrieve, then Generate" cycle. This simple loop is the secret sauce behind RAG's power and what makes its architecture so darn effective. It all starts when you ask a question, which kicks off the first critical phase.

Phase 1: The Retrieval Stage

The second you ask a question, the Retriever component jumps into action. Picture it as a super-fast, laser-focused research assistant. Its only job is to hunt down the most relevant bits of information from a connected knowledge base—this could be anything from a company's private documents to a massive industry database.

This isn't your average keyword search, though. The system first converts your question into a numerical representation called a vector embedding. It then uses this vector to scan the knowledge base for chunks of content that have a similar meaning, not just matching words.

Behind the scenes, this is often powered by specialized vector databases that use approximate nearest neighbor (ANN) algorithms. These systems can deliver retrieval speeds up to 10x faster than older methods, all without losing much accuracy. As these retrieval techniques get better, the system gets smarter at filtering out the noise, making sure only the best information makes it to the next step. You can read a bit more about the evolution of RAG technology from Coralogix.

The result of this first stage is a neat package of highly relevant text snippets, facts, and data points, all directly related to your initial query. This curated info is now ready to be passed on.

Phase 2: The Generation Stage

Once the retriever has gathered up the best facts, it bundles them together with your original question. This complete package—your query plus all that fresh context—is then handed off to the Generator. The Generator is the Large Language Model (LLM) itself, like a model from OpenAI or Google.

Now, instead of just trying to answer from its static, pre-trained memory, the LLM has a rich set of specific, timely, and factual information to work with. It uses its incredible language skills to synthesize all of it—the question and the retrieved data—into a single, coherent, and accurate answer.

The core idea is simple but powerful: Don't just ask the model to remember something. Instead, give it the information it needs and ask it to reason based on what's right in front of it.

This elegant "Retrieve, then Generate" process is the key to why RAG is so effective. It lets the LLM produce answers that are not only well-written but are also grounded in real, verifiable data. This makes the whole system more trustworthy, transparent, and capable of tackling very specific or up-to-the-minute topics that a standard LLM would otherwise fumble.

The Three Pillars Of A RAG System

To really get what retrieval-augmented generation is all about, it's helpful to break it down into its three core building blocks. Each piece has a distinct and vital role in the workflow, and they all work together like a specialized team to cook up accurate, context-aware answers.

Think of it like an expert research team: you have a librarian, a writer, and the library itself.

This simple structure is exactly what makes the RAG framework so powerful and flexible. Let's dig into how each of these pillars contributes to the final result.

The Retriever: The Search Specialist

First up is the Retriever. This is the system's search engine, acting as the team's incredibly diligent librarian. When a user asks a question, the retriever's job is to instantly scan the entire connected knowledge base to find the most relevant snippets of information.

It doesn’t just hunt for keywords. Instead, it uses sophisticated vector search techniques to find content that is conceptually similar to the user’s question. The quality of what it finds is absolutely critical—if the retriever pulls up irrelevant or junk data, the final answer will be just as bad.

The Generator: The Language Expert

Next, we have the Generator. This is the Large Language Model (LLM) itself—think of a model from OpenAI, Google, or Anthropic. In our analogy, the generator is the team’s skilled writer. It receives the original user query along with all the relevant facts and snippets the Retriever just handed over.

Its job isn't just to parrot back this information. The generator's real talent is synthesizing it all. It uses its advanced grasp of language, context, and reasoning to weave the retrieved data into a coherent, human-like, and accurate response. The LLM's ability to seamlessly blend external facts into a natural-sounding answer is what makes the RAG process feel so intelligent.

A RAG system's strength comes from this partnership: the Retriever provides the facts, and the Generator provides the fluency and reasoning to make sense of them.

The Knowledge Base: The Source of Truth

The final—and arguably most important—pillar is the Knowledge Base. This is the library itself, the source of truth where the Retriever goes to find information. At the end of the day, a RAG system is only as good as the data it can access.

This knowledge base can be almost anything:

- Simple Document Collections: A folder full of PDFs, Word documents, or plain text files.

- Structured Databases: SQL databases or internal company wikis with neatly organized data.

- Proprietary Data: A company's private collection of support tickets, product manuals, and internal reports.

By plugging into their own private data, businesses can create incredibly powerful, specialized AI assistants that understand their operations inside and out. But this means that maintaining a high-quality, up-to-date repository is non-negotiable. You can explore some best practices for knowledge management in 2025 to see what it takes to keep this data reliable and effective enough for a RAG system to use.

Why RAG Is a Game Changer for AI Applications

So, what's all the buzz about? Why are developers and businesses suddenly zeroing in on retrieval-augmented generation? The excitement isn't just hype; it comes from a handful of powerful benefits that tackle the biggest weaknesses of standard Large Language Models head-on.

Think of it this way: RAG turns an AI from a brilliant student who relies only on memorized textbooks into an expert researcher with a live, constantly updated library at their fingertips. This simple shift makes AI applications dramatically more practical, trustworthy, and useful in the real world.

Drastically Improved Accuracy

The single biggest win with RAG is how it slashes AI "hallucinations." We've all seen models confidently invent facts out of thin air. RAG puts a stop to that. By forcing the LLM to base its answers on specific information it just pulled from a trusted knowledge base, its ability to make things up is massively reduced.

Its adoption enables models to cite their information sources, enhancing transparency and reducing AI hallucinations, a known problem where chatbots produce fabricated or misleading answers.

This grounding in factual data means the answers aren't just plausible—they're verifiable. For any business, that's the crucial difference between a fun novelty and a reliable tool you can actually depend on.

Access to Current Information

Standard LLMs are like a photograph—their knowledge is frozen at the moment their training data was collected. RAG completely shatters that limitation. By plugging into an external, dynamic knowledge base, the AI can access and use information that's just seconds old.

This is absolutely essential for any application that needs up-to-the-minute data, like:

- Financial analysis bots that require the latest market data.

- Customer support agents that need to reference the newest product guides.

- News summary tools that have to report on breaking events as they happen.

This ability to tap into real-time information is similar to how you can connect ChatGPT to the internet for seamless usage, ensuring the AI's knowledge never goes stale.

To put these improvements into perspective, let's look at some numbers. The table below shows the typical impact seen across key AI performance metrics when RAG is implemented, based on various industry reports and case studies.

Impact of RAG on Key AI Performance Metrics

| Metric | Improvement Range with RAG |

|---|---|

| Factual Accuracy | 50-70% reduction in hallucinations |

| Response Relevance | 40-60% improvement in relevance to query |

| User Trust Score | 30-50% increase in user trust ratings |

| Knowledge Update Costs | 60-80% reduction in cost vs. full retraining |

As the data shows, the benefits are substantial and measurable, directly impacting both the quality of the AI's output and the operational efficiency of maintaining it.

Enhanced Trust and Cost Efficiency

Finally, RAG builds user trust while being incredibly smart from a business perspective. When a RAG-powered system gives you an answer, it can often cite the exact source it used. This transparency is huge—it lets users check the facts for themselves, building confidence in the AI's reliability.

On the business side, keeping the AI's knowledge fresh becomes ridiculously efficient. Instead of the costly, time-consuming nightmare of retraining a whole foundational model, you just update the documents in your knowledge base. It's that simple.

Major companies have reported that adding RAG not only boosted response accuracy but also cut down their model retraining costs by over 30%. It's a clear demonstration of RAG's impact on the bottom line. Exploring specific use cases is the best way to understand how techniques like RAG can truly power generative AI applications.

Real-World Examples Of RAG In Action

Alright, let's move away from the theory and look at where the rubber meets the road. Retrieval-augmented generation isn't just some concept floating around in a lab; it's actively solving real business problems across a ton of different industries right now.

By hooking up a language model to a curated, specific set of data, companies are building some seriously specialized and reliable AI tools. These examples show just how RAG can turn a generalist AI into a focused, in-house expert.

Intelligent Customer Support Bots

This is probably one of the most common places you'll see RAG in the wild. Forget those old, clunky chatbots that could only spit out scripted answers. Imagine a bot that has read—and instantly recalls—every single product manual, troubleshooting guide, and policy document your company has ever created.

A customer can ask something super specific, like, "How do I replace the filter on model X-45?" The RAG system doesn't guess. It zips over to the official manual, finds the exact paragraph, and uses that grounded information to generate a perfect, step-by-step answer. The results are pretty dramatic:

- Faster resolution times: Customers get what they need immediately, no waiting for a human agent to become available.

- Higher accuracy: Because the bot's answers are tied directly to official documentation, the risk of giving out bad information plummets.

- 24/7 availability: Expert-level help is always on, day or night.

This simple shift turns a basic FAQ bot into a genuinely useful technical assistant. It’s a win-win: customers are happier, and operational costs go down.

Internal Knowledge Management Systems

Big companies are notorious for having information silos. Critical data gets buried in a digital graveyard of thousands of reports, slide decks, and internal wikis that no one can ever find. RAG changes that by creating a single, smart search system that anyone in the company can talk to.

An employee can just ask a normal question in plain English, like, “What were our Q3 sales figures for the European market last year?” The RAG system will scan the relevant financial reports and internal summaries, pull the precise data, and give a direct answer, often with citations showing exactly where it got the information. This lets employees find what they need in seconds and make better decisions without having to dig through countless folders.

By unlocking the value trapped in internal documents, RAG effectively gives every employee a personal research assistant who has read every file the company owns.

For a closer look at more real-world applications and to get deeper into how these AI technologies are making an impact, you should check out sai-bot's blog for AI insights. It’s a great resource for seeing how different AI models and frameworks are actually being used in business today.

The Future Of Retrieval Augmented Generation

If you think Retrieval Augmented Generation is the final destination for AI, think again. It’s a massive leap forward, for sure, but it’s really just a critical stepping stone on a much longer journey. The future for this framework is incredibly bright, and it's already starting to push well beyond its current text-based world into something far more integrated and intelligent.

This evolution isn't some far-off concept; it's happening right now. While RAG feels like a brand-new idea, its roots go back decades. The real turning point, however, was in 2020 when Meta AI published its framework that fused retrieval with transformer models, setting the stage for the boom we're seeing today. You can actually dig into the historical evolution of RAG systems to see just how deep these foundations run.

This history is what makes what’s coming next so exciting.

Advancements In Multimodal RAG

The most thrilling frontier is easily Multimodal RAG. Very soon, these systems won't just be limited to pulling information from plain text. They'll be able to retrieve and make sense of data from a whole universe of sources.

Just imagine an AI that can:

- Analyze a video: You could ask it to summarize a dense, hour-long lecture or pinpoint the exact moment a specific concept was mentioned.

- Interpret images: You could have it generate a detailed explanation of a complex engineering diagram or compare the features of two product photos side-by-side.

- Understand audio: It could transcribe a podcast and pull out the most important insights, or give you the key takeaways from a recorded meeting.

Unlocking this kind of capability will open up a staggering number of new use cases. It will make AI assistants feel vastly more perceptive and genuinely useful because they'll finally understand the messy, diverse ways we actually store and share information.

Smarter Retrieval And Autonomous Agents

Beyond just handling different types of media, the core retrieval algorithms themselves are getting a major upgrade. The next generation of RAG will have a much better grasp of complex, nuanced queries. It will be able to distinguish the subtle differences in a user's intent to pull back information that’s not just relevant, but perfectly precise.

The ultimate goal is to move beyond simple question-answering and toward proactive problem-solving.

This is where things get really interesting, because it leads directly to integrating RAG into autonomous AI agents. These agents won't just answer questions; they'll use RAG to interact with live, real-time data streams to carry out complex, multi-step tasks.

Think about a RAG-powered agent monitoring financial market data to execute automated trading decisions, or one that actively manages a supply chain by reacting to live logistics reports. This isn't just a small improvement—it positions retrieval augmented generation as a foundational piece of technology that will shape how intelligent systems operate for years to come.

Common Questions About RAG

As you dig into retrieval-augmented generation, a few questions always seem to pop up. Answering them is a great way to get a solid handle on what RAG is, what it isn't, and where it fits in the wider world of AI. Let's walk through some of the most common ones.

RAG vs Fine-Tuning: What's The Difference?

The biggest difference between RAG and fine-tuning is how an AI model gets new information.

Fine-tuning is like sending a professional writer back to school to specialize in, say, medical writing. You're fundamentally changing the writer's internal knowledge by retraining them on a whole library of medical textbooks. The writer themselves is permanently altered.

RAG, on the other hand, doesn't change the writer at all. It just gives them a massive, perfectly organized reference library and an open-book policy. Before they write a single word, they can look up the most relevant facts for the task at hand. So, fine-tuning changes the model itself, while RAG changes the data the model has access to moment-to-moment.

A simple way to think about it: Fine-tuning is a permanent upgrade to the model's brain. RAG is like giving the model a super-fast, perfectly organized research assistant.

Can I Use RAG With Any Large Language Model?

For the most part, yes. And that’s one of its biggest strengths. RAG is a flexible framework, not a locked-in, proprietary model. You can think of it as a system where the Large Language Model is just one component—the "Generator"—that you can swap out as needed.

This gives you the freedom to choose the best engine for your specific job:

- GPT-4 for its muscle in complex reasoning.

- Llama 3 if you need the flexibility of an open-source model.

- Claude 3 when you're dealing with huge amounts of text.

This modular approach lets you balance performance, cost, and unique features, making RAG an incredibly adaptable tool for just about any project you can dream up.

What Are The Biggest Challenges When Implementing RAG?

While RAG is powerful, it’s not exactly plug-and-play. Getting it right usually comes down to tackling two major hurdles: data quality and retrieval relevance.

First, the old saying "garbage in, garbage out" has never been more true. The knowledge base you give your RAG system is its entire world. If your source documents are full of errors, are badly organized, or are just plain wrong, the AI’s answers will reflect that.

Second, making sure the retriever pulls the most relevant snippet of information for any given question is a serious engineering challenge. It's not enough to just find something related; it has to be the perfect context. Getting this wrong is the fastest way to lead the generator astray, causing it to produce answers that are vague, slightly off, or completely incorrect. This requires a ton of careful setup and constant tweaking to get right.